If we want machines to think, we need to teach them to see.

Fei-Fei Li, Director of the Stanford AI Lab and Stanford Vision Lab

Computer vision is the science of making machines “see”. More specifically, it uses cameras and computers in place of human eyes to identify, track, and measure targets, and then processes the resulting images so that they are better suited to human observation or to instrument detection.

Learning and computation let a machine better understand the visual environment, which is what makes a truly intelligent vision system possible. A huge amount of picture and video content exists today, and researchers need to understand it and find patterns in it to reveal details we have not noticed before. Computer vision relates to its neighboring fields as follows:

Computer vision builds mathematical models from images.

Computer graphics draws images from models, and image processing takes an image as input and gives a processed image as output.

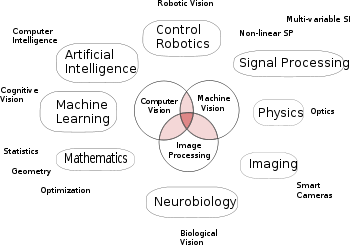

Computer vision overlaps with many other fields, including artificial intelligence, digital image processing, machine learning, deep learning, pattern recognition, probabilistic graphical models, scientific computing, and a good deal of mathematics. You can therefore think of this article as a first step toward further study in the field. It tries to cover as much as possible, but some of the more complicated topics may be missing, so please forgive any omissions.

Step 1 - Background

Generally speaking, you should have some academic background in related subjects, such as courses on probability, statistics, linear algebra, and calculus (differential and integral). Familiarity with matrix computation helps too. In my experience, an understanding of digital signal processing also makes the later concepts easier to grasp.

On the implementation side, you should be comfortable with either MATLAB or Python. Keep in mind that nearly everything in computer vision involves programming.

You can also take the Probabilistic Graphical Models course on Coursera. It is relatively difficult (it goes into more depth), so you may prefer to come back to it after studying for a while.

Step 2 - Digital Image Processing

Watch the course taught by Guillermo Sapiro of Duke University, "Image and Video Processing: From Mars to Hollywood with a Stop at the Hospital". The lessons are self-contained and include many exercises. You can find the course videos on Coursera and YouTube. In addition, you can read "Digital Image Processing" by Gonzalez and Woods. Run the book's examples in MATLAB, and I believe you will get something out of it.

Step 3 - Computer Vision

Once you have learned the basics of digital image processing, the next step is to understand how the relevant mathematical models are applied to images and video. The Computer Vision course taught by Prof. Mubarak Shah of the University of Central Florida is a good introduction that covers almost all of the basic concepts.

While watching these videos, you can practice the concepts and algorithms by working through the projects from Professor James Hays' computer vision course at Georgia Tech. These exercises are also based on MATLAB. Do not skip them: only through real practice will you gain a deeper understanding of the algorithms and formulas.

Step 4 - Advanced Computer Vision

If you have carefully studied the material in the first three steps, you are ready to move on to advanced computer vision.

Nikos Paragios and Pawan Kumar of École Centrale Paris teach a course called Discrete Inference in Artificial Vision. It covers the probabilistic models and the mathematics used in computer vision.

This is where things get more interesting. The course will give you a sense of how complex it is to build a machine vision system from simple models. Completing it is one last big step before moving on to academic papers.

Step 5 - Introducing Python and Open Source Frameworks

In this step we turn to the Python programming language.

There are many relevant packages for Python, such as OpenCV, PIL, and vlfeat, and now is the best time to apply them in your own projects. When an open source framework already does the job, there is no need to write everything from scratch.

If you need reference material, consider the book "Programming Computer Vision with Python". This one book is enough. You can try it out and see how your algorithms can be implemented in both MATLAB and Python.
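As a small illustration of leaning on an existing package instead of writing everything by hand, here is a minimal sketch. It assumes Pillow (the maintained fork of PIL) is installed, and the tiny synthetic image and the variable names are made up purely for this example.

```python
from PIL import Image, ImageFilter

# Build a tiny synthetic grayscale image (hypothetical test data):
# a black 5x5 canvas with a single white pixel in the center.
img = Image.new("L", (5, 5), 0)
img.putpixel((2, 2), 255)

# One library call replaces a hand-written averaging loop:
# BoxBlur(1) averages each pixel with its 3x3 neighborhood.
blurred = img.filter(ImageFilter.BoxBlur(1))

# Another built-in filter: a simple edge detector.
edges = img.filter(ImageFilter.FIND_EDGES)

print(blurred.size, blurred.getpixel((2, 2)))
print(edges.size)
```

With a real photo you would load the file with `Image.open(...)` instead of creating a blank canvas; the filtering calls stay the same.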

Step 6 - Machine Learning and ConvNets (Convolutional Neural Networks)

There is a wealth of material on learning machine learning from scratch, and you can find many tutorials online.

It is best to use Python for programming from now on. You can read the books "Building Machine Learning Systems with Python" and "Python Machine Learning".

Deep learning is very popular at present, and you can start learning how convolutional neural networks are applied in computer vision. Here we recommend Stanford's CS231n course, Convolutional Neural Networks for Visual Recognition.
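To give a feel for the operation at the heart of a convolutional network, here is a minimal pure-Python sketch of sliding a small kernel over an image. It is only an illustration: the function name `conv2d_valid`, the toy image, and the hand-picked edge kernel are assumptions made for this example, and a real network learns its kernels and uses an optimized library.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image (no padding, stride 1) and return the response map.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped before sliding.
    """
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out = []
    for r in range(img_h - k_h + 1):
        row = []
        for c in range(img_w - k_w + 1):
            # Weighted sum of the window whose top-left corner is (r, c).
            acc = 0
            for i in range(k_h):
                for j in range(k_w):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


# Toy 4x5 image: dark on the left, bright on the right.
image = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hand-picked kernel that responds to left-to-right brightness increases.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = conv2d_valid(image, vertical_edge)
print(response)  # [[0, 27, 27], [0, 27, 27]]
```

The response is zero over the flat dark region and large wherever the window straddles the dark-to-bright boundary, which is the kind of local pattern detection a convolutional layer stacks many times over.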

Step 7 - How to Go Further

At this point, you may feel that we have already covered a great deal and that there is too much to learn. Still, you can explore further.

One way is to look at the seminar series run by Sanja Fidler of the University of Toronto and by James Hays, which will help you understand the latest ideas in computer vision research.

Another is to follow the papers from top conferences such as CVPR, ICCV, ECCV, and BMVC (you can also follow the related coverage on Lei Feng Net by searching for the "Lei Feng Net" public account). The workshops, keynote talks, and tutorials on the conference programs are sure to teach you a great deal.

Summary: If you complete all of the learning tasks step by step, you will know about the history of filters, feature detection and description, camera models, and trackers in computer vision, and you will have learned about segmentation and recognition, neural networks, and the latest progress in deep learning. I hope this article helps you go further in computer vision and learn more.

PS: This article was compiled exclusively by Lei Feng Net and may not be reproduced without permission.

Via KDnuggets
